IMAGE: Namibia Fact Check

The digital threats that have been seen around elections elsewhere are rearing up ahead of Namibia’s November 2024 elections

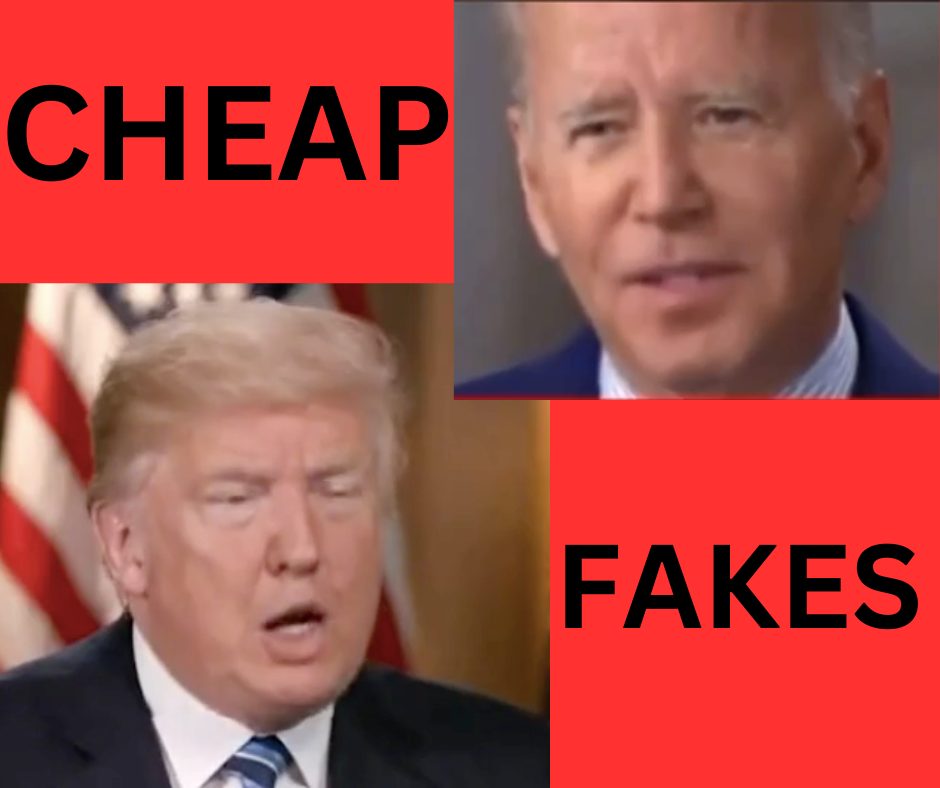

NOTE: This article has been edited to substitute “deepfakes” for cheapfakes in the image, headline and other parts of the text.

With a third of the world’s countries holding, or having already held, elections in 2024, artificial intelligence (AI) and deepfake-generating technologies have emerged as threats to political and electoral processes.

“Global liberal democracy faces a near unprecedented list of digital threats in 2024 as the increasing exploitation of AI and the rampant spread of disinformation threaten the integrity of elections in more than 60 countries. And we are woefully unprepared.”

– Tom Felle, Associate Professor of Journalism, University of Galway, writing in The Conversation

Namibia will probably also see AI-generated deepfakes – whether video or audio – appear on the political landscape as the country moves closer to the elections.

In fact, AI-generated fakes related to Namibian politics have already started circulating in Namibian social media spaces. Although these early examples come across as rather crude, they point to what could be coming.

“Generative artificial intelligence (GAI) adds a new dimension to the problem of disinformation. Freely available and largely unregulated tools make it possible for anyone to generate false information and fake content in vast quantities. These include imitating the voices of real people and creating photos and videos that are indistinguishable from real ones.”

– Generative AI is the ultimate disinformation amplifier

The Donald Trump clip

In mid-March 2024 a video clip of former US president Donald Trump talking about blowing up Namibian uranium started circulating in Namibian WhatsApp and other social media spaces.

The clip appears to have been shared primarily in Namibian WhatsApp groups through March and April 2024. It was created using Parrot AI, a free online AI voice and video generator that converts text to speech and lets the user make an American celebrity’s voice say whatever the user wants.

The footage of the former US president used in the clip was taken from a May 2017 interview conducted by Lester Holt of the US’s NBC News network about the then US president’s firing of the head of the country’s Federal Bureau of Investigation (FBI).

In an election context, the Parrot AI Donald Trump voice generator has also been used to encourage Pakistani-Americans to support the former US president’s campaign for a second term, which will also be decided by elections in November 2024. In that instance, a clip purported to show Trump urging Americans of Pakistani origin to vote for him so that, once elected, he could free jailed former Pakistani prime minister Imran Khan. The clip had to be debunked after it went viral in Pakistani social media spaces in March, following the country’s February 2024 elections, in which Imran Khan’s party did very well even though he was in jail.

The Joe Biden clip

In mid-April 2024, a similar video clip of current US president Joe Biden also started circulating in Namibian WhatsApp and other social media spaces.

While the original source of the Joe Biden audio-visual fake is unclear, the US president’s voice is used in this instance to mention the oil discoveries made off the Namibian coast in recent years and to express “full support” for the ruling Swapo Party and its candidate for Namibia’s presidency, Netumbo Nandi-Ndaitwah, in this year’s elections.

The Biden clip also appears to have been generated using the Parrot AI application, even though it does not have the Parrot AI watermark on it. An online image search reveals that the Biden voice generator has been extensively used recently to say a variety of things.

“Elections all over the world are endangered by a vast array of sophisticated digital threats. This year, when more voters head to the polls than ever before, AI-driven deepfakes threaten electoral processes everywhere, with potentially disastrous consequences for at-risk democracies.”

– Global Investigative Journalism Network (GIJN)

These examples show how free or cheap online AI deepfake generators can be used to create political messaging that influences elections by stoking tensions and divisions. Committed and motivated political actors can use such AI content generators, which mimic the voices of politicians, to sow confusion and even incite violence on the electoral landscape.

To get a better understanding of the AI-generated misleading or fake content issue and how to spot such disinformation, read this article by authors from WITNESS, an “organisation that is addressing how transparency in AI production can help mitigate the increasing confusion and lack of trust in the information environment.”